I’m trying to find an explanation for my preference for a center speaker over phantom center, and for mono over stereo for Zoom calls, videos of talks, and podcasts.

I'm about to get mathy, so ... well, anyway.

I recently put a single monitor connected to a DAC on my desktop, which doesn’t have enough room for two speakers. I had never liked Zoom calls and talks/podcasts over the hifi system in my home office. The voices sounded “fuzzy”. Also I don’t like the “voice of god” effect you get from phantom center’s wide imaging, for movies.

With mono computer audio, or a center speaker for movies, voices seem more "in focus".

Of course this is my experience/preference, but I have no doubt I could tell mono from phantom center in a blind test.

I’ve been perusing ASR threads, Dr. Toole’s book, and other sources, and I can’t find a conclusive explanation of the differences between phantom center and real center / mono which I can hear so clearly.

In Dr. Toole’s book there is a description, in chapter 7, of the problem with phantom center. The calculations show a 0.27 ms inter-aural delay from left and right speakers placed 30 degrees from center. The delay induces a destructive-interference dip at around 2 kHz (half a cycle of delay falls at 1 / (2 × 0.27 ms) ≈ 1.85 kHz).

But Toole explains that in normally reflective rooms, the dip is not as noticeable anyway.

I wonder about group delay though… The cross-talk interference changes the phase of the combined signal from frequency to frequency, so it should also smear the group delay, potentially by more than the constant 0.27ms one might expect.

Now, Toole’s book mentions a 0.27ms inter-aural delay, and 6dB attenuation of the "second" signal. By the symmetry of the setup, 0.27ms is also the delay between the L and R speaker signals arriving at a single ear.

Doing some math now:

The phase shift created by a time delay is 2π*f*delay where f is the frequency in hertz, and the delay is measured in seconds.

This phase is measured in radians. For convenience, let’s convert to cycles.

phase(cycles) = f*delay

We don’t care about full cycles, so really,

phase(cycles) = fractional-part( f*delay )

This is a “sawtooth” graph. In our case, delay = 0.27ms. The frequency at which we get to 1 full cycle of delay is f = 1/0.27ms ≈ 3704 Hz. That is twice the frequency of the “stereo dip”, which makes sense: the dip sits at half a cycle of delay. The “group delay” of the second signal on its own, if we computed the derivative of this phase, would clearly be a constant 0.27ms.
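A quick sketch of that arithmetic in Python, assuming only the 0.27ms delay quoted above. The names `delay_s` and `phase_cycles` are mine, just for illustration.

```python
delay_s = 0.27e-3  # delay between the two arrivals at one ear, in seconds


def phase_cycles(freq_hz, delay=delay_s):
    """Phase lag of the delayed arrival in cycles, with whole cycles dropped."""
    return (freq_hz * delay) % 1.0


full_cycle_hz = 1.0 / delay_s        # delayed copy lags by exactly one cycle
half_cycle_hz = 1.0 / (2 * delay_s)  # delayed copy lags by half a cycle (the dip)

print(f"one full cycle of delay at {full_cycle_hz:.0f} Hz")
print(f"half a cycle of delay at   {half_cycle_hz:.0f} Hz")
print(f"phase lag at 1 kHz:        {phase_cycles(1000.0):.2f} cycles")
```

The half-cycle frequency lands a bit under 2 kHz, which lines up with the "around 2 kHz" dip.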

Now I ask: given that the second signal is delayed by a certain phase, and is 6dB attenuated, what is the combined effect?

6dB attenuated means 1/4 the power, and 1/2 the amplitude.
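For anyone who wants to check the dB conversion, here is a small sketch. The function names are my own; 1/2 and 1/4 are the usual round-number approximations, since exactly half amplitude is about -6.02 dB.

```python
import math


def db_to_amplitude_ratio(db):
    """Amplitude (pressure/voltage) ratio for a level change in dB."""
    return 10 ** (db / 20)


def db_to_power_ratio(db):
    """Power ratio for a level change in dB."""
    return 10 ** (db / 10)


amp = db_to_amplitude_ratio(-6.0)     # close to 1/2
pwr = db_to_power_ratio(-6.0)         # close to 1/4
exact_half_db = 20 * math.log10(0.5)  # level of exactly half amplitude

print(f"-6 dB -> amplitude x{amp:.3f}, power x{pwr:.3f}")
print(f"exactly half amplitude is {exact_half_db:.2f} dB")
```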

So, we’re dealing with a second sinusoid arriving `phase` cycles later, with amplitude 1/2. To get fewer fractions, let’s say the first signal has amplitude 2, the second signal amplitude 1 and delay is `phase` cycles.

We need to add the two phasors. Using vector notation as (x, y) pairs:

Speaker 1: (2, 0)

Speaker 2: (cos(2π*phase), sin(2π*phase)) ... the 2π is there because phase is in cycles…

To get the phase, I add the vectors, and do `tan⁻¹` of the “y” over “x”.

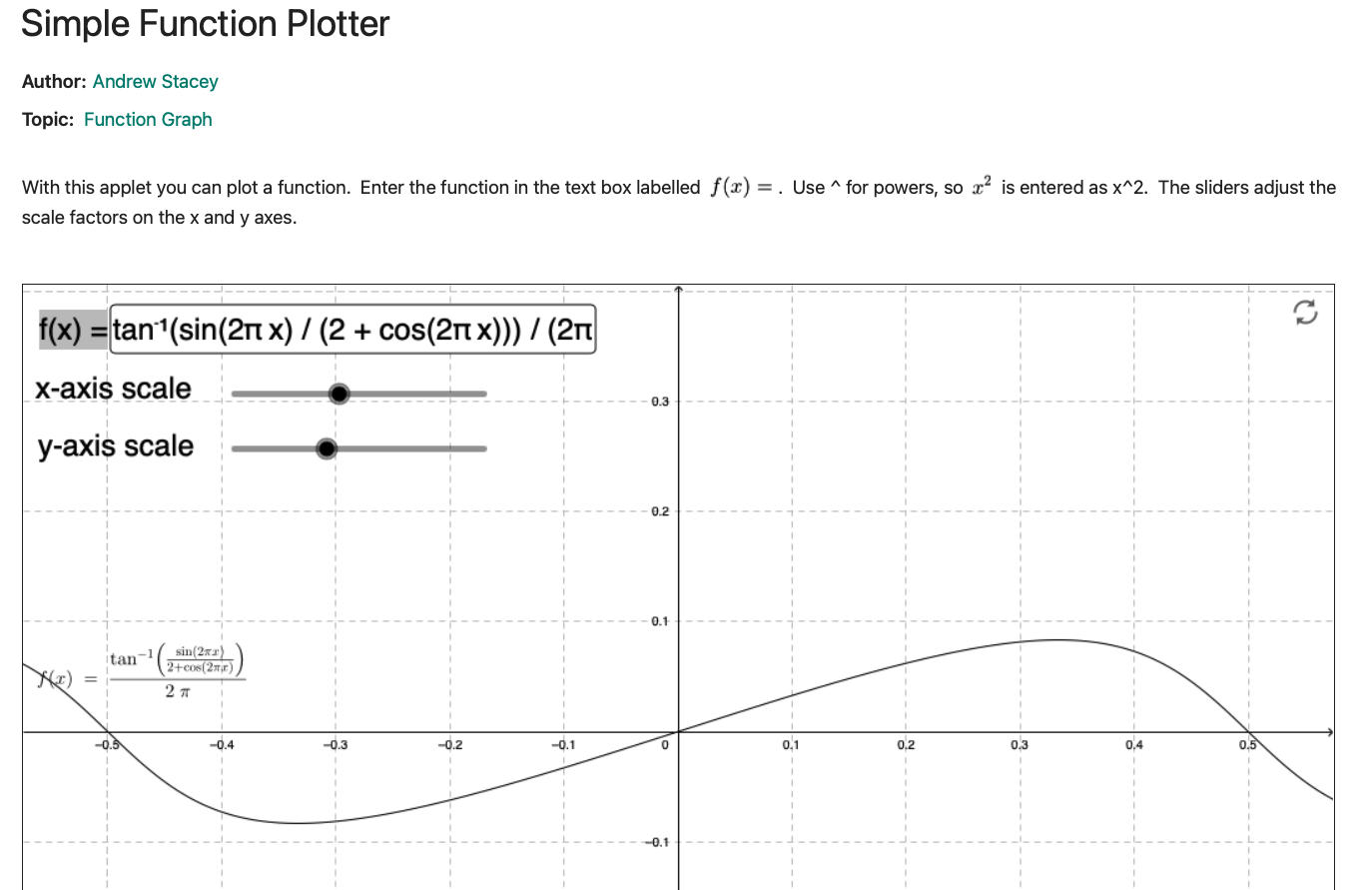

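So the combined phase, in cycles, as a function of x (the phase of the second signal, also in cycles) is:

tan⁻¹(sin(2π x) / (2 + cos(2π x))) / (2π)

with the 2π's there to get both "input" and "output" measured in cycles.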
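The same sum can be sketched with complex numbers in Python, as a cross-check on the arctan expression. This assumes the simplified model used here (direct sound only, amplitudes 2 and 1, the same attenuation at every frequency). The function names are hypothetical.

```python
import cmath
import math


def combined_phase_cycles(x):
    """Phase of (amplitude 2 at phase 0) + (amplitude 1 at phase x), in cycles."""
    total = 2 + cmath.exp(2j * math.pi * x)
    return cmath.phase(total) / (2 * math.pi)


def combined_phase_formula(x):
    """Same quantity written as arctan(y / x) of the summed vectors."""
    t = 2 * math.pi * x
    return math.atan(math.sin(t) / (2 + math.cos(t))) / (2 * math.pi)


def combined_level_db(x):
    """Level of the sum relative to both signals adding fully in phase (amplitude 3)."""
    total = 2 + cmath.exp(2j * math.pi * x)
    return 20 * math.log10(abs(total) / 3)


for x in (0.0, 0.25, 1 / 3, 0.5, 0.75):
    print(f"x={x:.3f}  phase={combined_phase_cycles(x):+.4f} cycles  "
          f"level={combined_level_db(x):+.2f} dB")
```

Because the real part 2 + cos(2π x) never goes below 1, plain arctan and the complex-number phase agree everywhere. In this model the combined phase never strays more than 1/12 of a cycle (30 degrees) from the first signal, and the level at half a cycle is about 9.5 dB below the in-phase sum.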

Plotting this in GeoGebra (https://www.geogebra.org/m/SFacefc2) gives a curve which, eyeballing it, veers between a mild positive slope and a 45-degree negative slope.

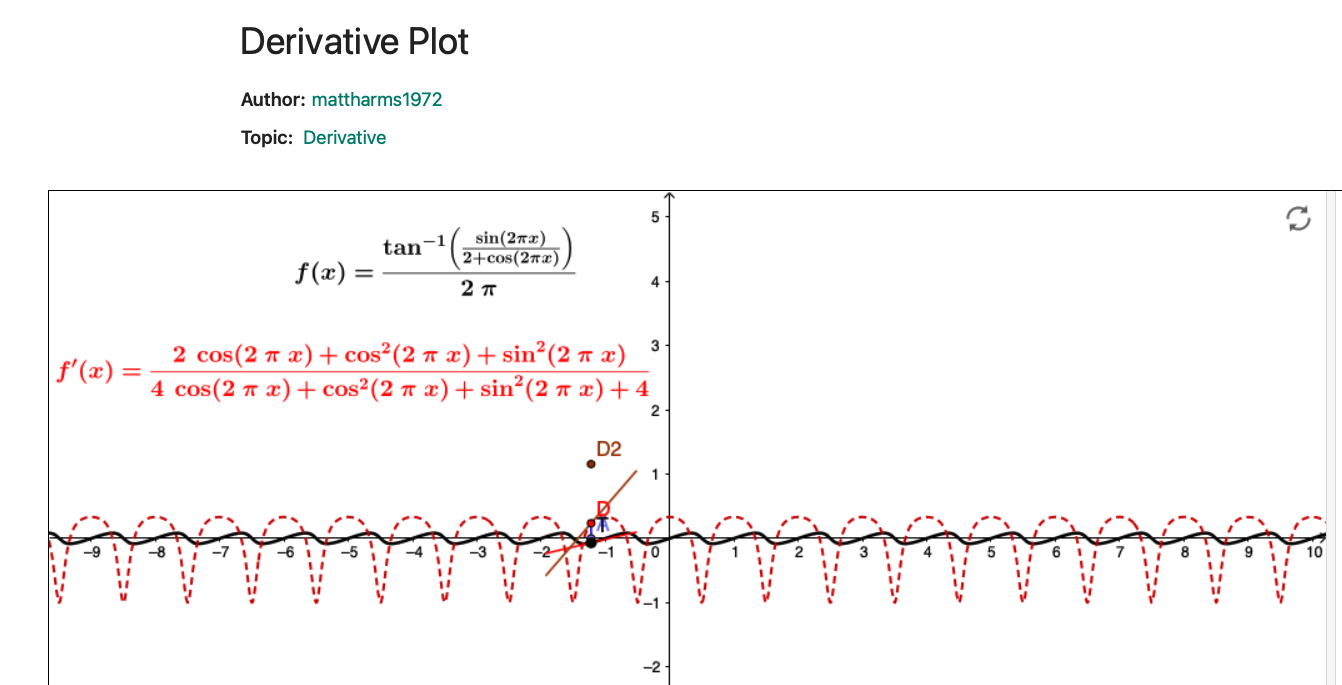

Asking geogebra (politely) to plot the derivative

I could not manage to alter the axes in this derivative plot, but we only care about the fragment between 0 and 1 cycle. The rest is repetition.

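The red dashed line is the derivative. Eyeballed, it moves between -1 and about 0.25; working the derivative out by hand gives (1 + 2 cos(2π x)) / (5 + 4 cos(2π x)), so the exact range is -1 (at half a cycle) to 1/3 (at 0 cycles).

So, we have up to 133% "slope difference" peak to peak.

Applying the chain rule:

group delay = [value of red curve] * 0.27ms

So, up to 1.33 * 0.27ms = 0.36ms shift in group delay.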
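A numeric check of the derivative range, assuming the same two-phasor model. The closed form in `slope` is my own differentiation of the arctan expression, checked here against a finite difference. It gives a minimum of -1 at half a cycle and a maximum of 1/3 at zero, so the peak-to-peak swing comes out at about 0.36ms.

```python
import math

DELAY_MS = 0.27  # delay of the second arrival, in milliseconds


def slope(x):
    """d(combined phase)/dx, both in cycles; closed form of the derivative."""
    c = math.cos(2 * math.pi * x)
    return (1 + 2 * c) / (5 + 4 * c)


def numeric_slope(x, h=1e-6):
    """Central-difference derivative of the arctan phase formula, as a check."""
    def phase(u):
        t = 2 * math.pi * u
        return math.atan(math.sin(t) / (2 + math.cos(t))) / (2 * math.pi)
    return (phase(x + h) - phase(x - h)) / (2 * h)


xs = [i / 1000 for i in range(1001)]  # one full cycle of x
slopes = [slope(x) for x in xs]
max_slope, min_slope = max(slopes), min(slopes)
swing_ms = (max_slope - min_slope) * DELAY_MS

print(f"slope range: {min_slope:+.3f} to {max_slope:+.3f}")
print(f"group delay range: {min_slope * DELAY_MS:+.3f} ms to {max_slope * DELAY_MS:+.3f} ms")
print(f"peak-to-peak swing: {swing_ms:.2f} ms")
```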

This is below the Blauert et al. threshold for detection of group delay...

But I've been wondering whether phase and group delay really are more important than they are given credit for.

David Griesinger (whom I discovered thanks to the ASR thread "Science on low frequency quality?" on audiosciencereview.com) will often comment that to get "realism" in vocals we need true phase-linear speakers.

And in this talk ("Understanding & Improving Speech Intelligibility for Home Theater", www.youtube.com) Matthew Poes comments on Griesinger and speculates that there must be something to his claims, and that phase really seems more important than previously thought.

What I'm wondering is whether the systematic phase/delay smearing from stereo cross-talk could be the explanation for the "out-of-focus" sensation of stereo speakers fed identical voice signals.

I'm also wondering if that could contribute to the problems with intelligibility and hearing fatigue often reported at ASR for movie dialog...

EDIT: bunch of edits in the above to clarify what I meant :/
