This is a reprint of an article written ca. 2011-2012. I've been meaning to rewrite and update it, but Life and Work keep getting in the way, so I am reposting the original as-is. Take it for what it's worth: an introductory look at one of the potential causes of differences in sound among amplifiers driving different speakers.

There are two parts: the original, and a follow-on post, written after some readers questioned some of the results, with parameters tweaked to match some additional "real-world" amplifiers. The readers' comments are not mine to share, but hopefully you won't miss them.

FYI - Don

Part 1

Showing the impact of speaker loading on amplifiers is not too hard but is tedious (at least it was for me!). I set up two amps and two speakers, then plotted the speaker impedance and the frequency response at the amplifier terminals. The speakers were 8-ohm nominal, 3-way, with crossovers at 300 Hz and 3 kHz. The first amp is SS with 0.1-ohm output (damping factor of 80) rising to about 1 ohm (DF=12.5) at 20 kHz. This mimics the way many amplifiers behave, with output impedance rising with frequency. The second amp is a tube amp with 0.8-ohm output (DF=10) rising to about 4 ohms at 20 kHz (DF=2; this is about as bad as I have measured in the primordial past).
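
The damping factor figures above follow the usual definition, DF = nominal load / output impedance, with an 8-ohm nominal load. A minimal sketch of that arithmetic, assuming the 8-ohm reference (the function names are illustrative, not from any library). With this definition 1 ohm works out to a DF of 8 (a DF of 12.5 corresponds to 0.64 ohm), so treat the 20 kHz SS figures as approximate.

```python
# Damping factor (DF) as used in the text: nominal load / amplifier output impedance.
NOMINAL_LOAD_OHMS = 8.0  # assumption: DF is referenced to an 8-ohm load


def damping_factor(z_out_ohms: float, load_ohms: float = NOMINAL_LOAD_OHMS) -> float:
    """Return DF = load / output impedance (both in ohms)."""
    return load_ohms / z_out_ohms


def output_impedance(df: float, load_ohms: float = NOMINAL_LOAD_OHMS) -> float:
    """Inverse: the output impedance (ohms) implied by a given DF."""
    return load_ohms / df


if __name__ == "__main__":
    # Part 1 amplifier models, using the impedances quoted in the text.
    for label, z in [("SS, LF", 0.1), ("SS, 20 kHz", 1.0),
                     ("Tube, LF", 0.8), ("Tube, 20 kHz", 4.0)]:
        print(f"{label:12s} Zout = {z:4.2f} ohm -> DF = {damping_factor(z):5.1f}")
```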

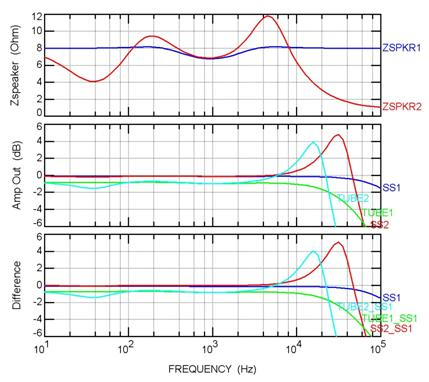

The top plot shows the impedance, the next the amplifier outputs, and in the bottom plot I repeated the first amplifier’s response and then showed the difference between each of the other amplitudes and that first (SS) amp.

Speaker 1 shows a pretty benign impedance magnitude, with a slight dip where the midrange driver comes into play. The dip is there because I used very simple first-order crossovers, so there is some interaction among drivers. For the second speaker, I started with the network from the first, then added a roll-off at the high end to simulate a ribbon-type tweeter and a resonance dip to 4 ohms in the bass. This led to some peaks as well, though nothing terribly extreme.
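
Why a simple first-order 3-way network dips in the midrange can be seen even with idealized parts. The sketch below assumes each driver is a pure 8-ohm resistor and uses the textbook first-order values L = R/(2πf) and C = 1/(2πfR) at the 300 Hz and 3 kHz crossover points; it is not the author's actual speaker model. The impedance sits at about 8 ohms at the frequency extremes but falls to roughly 6.8 ohms near the geometric mean of the crossover points (about 950 Hz), where the midrange branch is purely resistive and the woofer and tweeter branches still draw current.

```python
import math

R_DRIVER = 8.0                 # assumption: each driver modeled as a pure 8-ohm resistor
F_LOW, F_HIGH = 300.0, 3000.0  # crossover points from the text

# Textbook first-order element values for a resistive load R at corner frequency f.
L_WOOFER = R_DRIVER / (2 * math.pi * F_LOW)        # series L: woofer low-pass
C_MID = 1 / (2 * math.pi * F_LOW * R_DRIVER)       # series C: mid high-pass
L_MID = R_DRIVER / (2 * math.pi * F_HIGH)          # series L: mid low-pass
C_TWEETER = 1 / (2 * math.pi * F_HIGH * R_DRIVER)  # series C: tweeter high-pass


def speaker_impedance(f: float) -> complex:
    """Input impedance (ohms) of the three parallel crossover branches at f (Hz)."""
    w = 2 * math.pi * f
    z_woofer = R_DRIVER + 1j * w * L_WOOFER
    z_mid = R_DRIVER + 1j * w * L_MID + 1 / (1j * w * C_MID)
    z_tweeter = R_DRIVER + 1 / (1j * w * C_TWEETER)
    return 1 / (1 / z_woofer + 1 / z_mid + 1 / z_tweeter)


if __name__ == "__main__":
    for f in (20, 300, 949, 3000, 20000):
        print(f"{f:6d} Hz: |Z| = {abs(speaker_impedance(f)):.2f} ohm")
```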

Looking at the plots in the middle, we can see how the amplifiers’ output impedances influence the response. A perfect amp would show a straight line. The SS amp into Speaker 1 (SS1, blue) shows a very flat response with a bit of HF roll-off, as expected. The tube amp into the first speaker (TUBE1, green) has a little less amplitude due to its higher output impedance (this is insignificant, since you’d just turn up the volume a little), and the HF roll-off kicks in sooner (again, expected for a tube amp). The plots into the second speaker are more interesting, with both amps showing some peaking at HF. This is because the rising output impedance interacts with the capacitive load to cause a peak. Yes, this can happen in real life; it is one reason some amps are not stable with highly capacitive loads. The peak is larger and at a higher frequency with the SS amp (SS2, red) than with the tube amp (TUBE2, light blue), because of the tube amp’s higher output impedance and faster HF roll-off. Note that the tube amp into speaker 2 also has an LF dip, demonstrating the trouble it has driving low-impedance loads even at LF.
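
The response at the terminals is a voltage divider between the amplifier's output impedance and the speaker's impedance. Below is a minimal sketch, assuming the rising output impedance is a resistor in series with an inductor (about 7.9 µH, sized so the Part 1 SS model reaches roughly 1 ohm at 20 kHz) and a hypothetical capacitive load of 8 ohms in parallel with 4 µF. Those load values are illustrative, not the author's speaker 2, and they exaggerate the effect: the output inductance and load capacitance resonate near 28 kHz.

```python
import math


def amp_response(f, r_out, l_out, z_load):
    """|V_speaker / V_source| for a source with Zout = r_out + j*w*l_out."""
    w = 2 * math.pi * f
    z_out = r_out + 1j * w * l_out
    zl = z_load(f)
    return abs(zl / (z_out + zl))


def resistive_8(f):
    """Idealized 8-ohm resistive load."""
    return 8.0 + 0j


def capacitive_load(f, r=8.0, c=4e-6):
    """Hypothetical capacitive HF load: r in parallel with c."""
    w = 2 * math.pi * f
    return 1 / (1 / r + 1j * w * c)


# Part 1 SS model: 0.1 ohm at LF, rising to ~1 ohm at 20 kHz via a series inductor.
R_SS = 0.1
L_SS = math.sqrt(1.0**2 - R_SS**2) / (2 * math.pi * 20e3)  # about 7.9 uH

if __name__ == "__main__":
    for f in (1e3, 10e3, 20e3, 28e3, 40e3):
        h_r = amp_response(f, R_SS, L_SS, resistive_8)
        h_c = amp_response(f, R_SS, L_SS, capacitive_load)
        print(f"{f / 1e3:5.0f} kHz: resistive {20 * math.log10(h_r):6.2f} dB, "
              f"capacitive {20 * math.log10(h_c):6.2f} dB")
```

Into the resistive load the level only falls with frequency; into the capacitive load it rises above 0 dB around the resonance, which is the same mechanism behind the SS2 and TUBE2 peaks.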

The final plot shows the SS amp into speaker 1, SS1 (blue), and the difference between that response and the other outputs. This is what we might hear when comparing amplifiers. Into the second speaker, the SS amp (red) is almost 3 dB higher at 20 kHz, peaking at ~5 dB at ~25 kHz, then rolling off. There is only about 1 dB difference at 10 kHz, rapidly decreasing to zero (a perfect match) below that. The tube amp into speaker 1 rolls off a little sooner, but is only about 1 dB down at 20 kHz, a difference we are unlikely to hear. Into the second speaker, the tube amp’s HF peak is about 4 dB at 15 kHz, dropping on either side. There is also that dip of ~1 dB at 30 Hz.
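
Each difference curve in the bottom plot is just the ratio of two responses expressed in dB. A minimal sketch, assuming a purely resistive LF approximation (the helper names are hypothetical): the Part 1 tube amp (0.8 ohm) into an 8-ohm load sits about 0.72 dB below the SS amp (0.1 ohm), the level offset the text says you would simply trim with the volume control.

```python
import math


def divider_gain(z_out: complex, z_load: complex) -> float:
    """Magnitude of z_load / (z_out + z_load)."""
    return abs(z_load / (z_out + z_load))


def difference_db(h_test: float, h_ref: float) -> float:
    """Level of h_test relative to h_ref, in dB (positive means louder)."""
    return 20 * math.log10(h_test / h_ref)


if __name__ == "__main__":
    # Resistive LF approximation: SS (0.1 ohm) vs tube (0.8 ohm), both into 8 ohms.
    ref = divider_gain(0.1, 8.0)
    tube = divider_gain(0.8, 8.0)
    print(f"TUBE1 relative to SS1: {difference_db(tube, ref):.2f} dB")
```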

Can you hear this? I don’t know, but in a careful side-by-side test you might. Real amplifier and speaker impedances are more complicated and so might fare better or worse in a test. However, at least it shows that bad things can happen to good amps when presented with the real world of speakers.

HTH - Don

Part 2

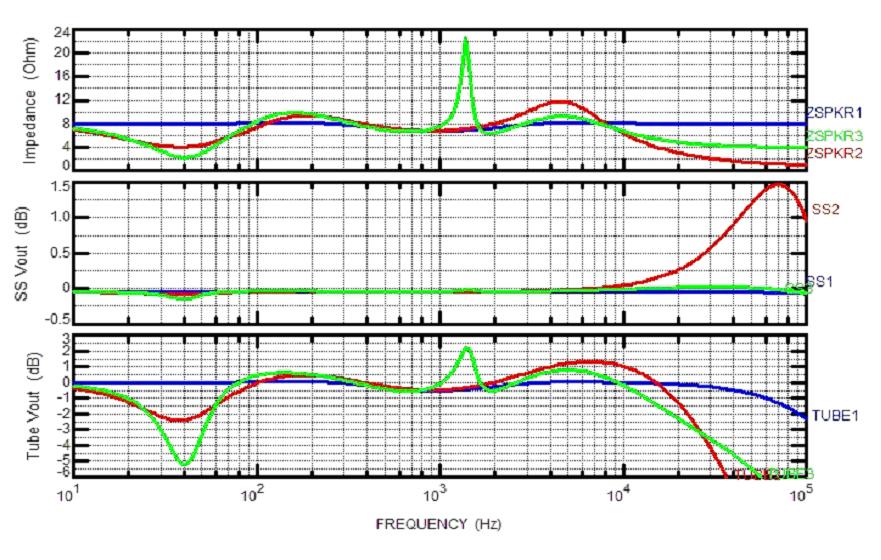

Here is a new set of plots to address some of the comments made earlier. The SS amp now has an LF damping factor (DF) of 200 (0.04-ohm output) dropping to 50 (0.16 ohm) at 20 kHz. The tube amp went the other way, with an output impedance of 4 ohms (DF = 2) rising to 6 ohms (DF = 1.33) at 20 kHz.
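
One way to turn these LF and 20 kHz figures into a model is a resistor in series with an inductor, the "output inductance" mentioned below. The sketch assumes that topology (the author's actual model is not given) and solves |R + j2πfL| = Z at 20 kHz for L: about 1.23 µH for the SS amp and about 35.6 µH for the tube amp.

```python
import math

F_REF = 20e3  # frequency at which the rising output impedance is specified


def series_inductance(r_lf: float, z_at_ref: float, f_ref: float = F_REF) -> float:
    """Inductance (H) such that |r_lf + j*2*pi*f_ref*L| equals z_at_ref."""
    if z_at_ref <= r_lf:
        raise ValueError("impedance must rise with frequency for this model")
    return math.sqrt(z_at_ref**2 - r_lf**2) / (2 * math.pi * f_ref)


def z_out(f: float, r_lf: float, l_h: float) -> complex:
    """Output impedance of the assumed series R-L model at frequency f (Hz)."""
    return r_lf + 1j * 2 * math.pi * f * l_h


if __name__ == "__main__":
    for name, r, z20k in [("SS", 0.04, 0.16), ("Tube", 4.0, 6.0)]:
        l_h = series_inductance(r, z20k)
        z20 = abs(z_out(20e3, r, l_h))
        print(f"{name:4s}: L = {l_h * 1e6:6.2f} uH, |Zout(20 kHz)| = {z20:.2f} ohm, "
              f"DF(20 kHz) = {8.0 / z20:.2f}")
```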

There are no changes to speaker 1, still a pretty tame 8-ohm load, or speaker 2, dipping to 4 ohms at 40 Hz and ~3 ohms at 20 kHz (with a few other peaks and valleys). I added another speaker (3) that dips all the way to 2 ohms at 40 Hz, a milder HF load (~5 ohms at 20 kHz), but adds a high-Q peak around 1.5 kHz that raises the impedance to about 22 ohms.
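
A high-Q impedance peak like speaker 3's can be represented by a parallel R-L-C tank in series with the rest of the load. The sketch below models only that peak, using hypothetical values (6 ohms base resistance, a 16-ohm tank for a 22-ohm total at 1.5 kHz, and Q = 5); it does not include the 2-ohm bass dip or any other feature of the author's speaker 3.

```python
import math

R_BASE = 6.0    # hypothetical resistive part away from the peak
R_PEAK = 16.0   # hypothetical tank resistance: R_BASE + R_PEAK = 22 ohms at F0
F0 = 1500.0     # peak frequency from the text
Q = 5.0         # hypothetical "high Q"

W0 = 2 * math.pi * F0
L_TANK = R_PEAK / (Q * W0)   # parallel RLC: Q = R / (w0 * L) = w0 * R * C
C_TANK = Q / (R_PEAK * W0)


def speaker3_peak_impedance(f: float) -> complex:
    """R_BASE in series with a parallel R-L-C tank resonant at F0 (ohms)."""
    w = 2 * math.pi * f
    y_tank = 1 / R_PEAK + 1 / (1j * w * L_TANK) + 1j * w * C_TANK
    return R_BASE + 1 / y_tank


if __name__ == "__main__":
    for f in (100, 1000, 1500, 2000, 10000):
        print(f"{f:6d} Hz: |Z| = {abs(speaker3_peak_impedance(f)):5.2f} ohm")
```

At resonance the inductive and capacitive admittances cancel, leaving R_BASE + R_PEAK; far from 1.5 kHz the tank is nearly a short and the load falls back toward R_BASE.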

Now the SS amp performs pretty well into every speaker, though the low HF impedance of speaker 2 still interacts with the output inductance to produce a 1.5 dB peak way up at 70 kHz (only ~0.25 dB at 20 kHz). The deeper LF impedance dip of speaker 3 causes only a very small dip in response, about 0.1 dB.
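
The small LF dip follows from the divider arithmetic. A minimal sketch, assuming a purely resistive approximation at LF (0.04-ohm output into 2 ohms versus 8 ohms, ignoring reactance); it gives about 0.13 dB, in line with the ~0.1 dB read from the plot.

```python
import math


def divider_db(z_out: float, z_load: float) -> float:
    """Level at the speaker terminals relative to the open-circuit output, in dB."""
    return 20 * math.log10(z_load / (z_out + z_load))


SS_ZOUT_LF = 0.04  # Part 2 SS model at LF (DF = 200)

if __name__ == "__main__":
    dip = divider_db(SS_ZOUT_LF, 2.0) - divider_db(SS_ZOUT_LF, 8.0)
    print(f"2-ohm dip relative to 8 ohms: {dip:.2f} dB")
```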

The tube amp struggles due to the much higher output impedance of the new model. It is OK with speaker 1 (have I mentioned how much I like my Magnepans?) but struggles with speakers 2 and 3.
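
The same divider arithmetic shows why a 4-ohm source has trouble with speaker 3. A minimal sketch, assuming a resistive approximation that ignores the reactive parts of both the amp and the speaker: into 2, 8, and 22 ohms the level is about -9.54, -3.52, and -1.45 dB, so the response tracks the load with a swing of roughly 8 dB between the 2-ohm dip and the 22-ohm peak.

```python
import math

TUBE_ZOUT = 4.0  # Part 2 tube model at LF/midband (DF = 2); reactance ignored


def level_db(z_out: float, z_load: float) -> float:
    """Level at the speaker terminals relative to the open-circuit output, in dB."""
    return 20 * math.log10(z_load / (z_out + z_load))


if __name__ == "__main__":
    for z in (2.0, 8.0, 22.0):
        print(f"{z:4.0f} ohm load: {level_db(TUBE_ZOUT, z):6.2f} dB")
    swing = level_db(TUBE_ZOUT, 22.0) - level_db(TUBE_ZOUT, 2.0)
    print(f"swing between 2-ohm dip and 22-ohm peak: {swing:.2f} dB")
```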

This repeats work I did long ago, and that no doubt many, many others have done. It helps explain my position on amps: for most speakers I find it easy to distinguish an SS amp from a tube amp, almost impossible to pick between two SS amps at reasonable levels, and much harder to pick between two tube amps (though IME tube amps tend to vary much more than SS amps). This also helps explain why some people prefer certain amp/speaker pairings.

HTH - Don
