> Your questions suggest the opposite of familiarity. Please don't make me drag out the link to Monty's video

I've seen it. I understand that you can recreate the analog signal of a sine wave with 2 samples perfectly.


High-res audio comparison: Linn Records Free High Res Samples

- Thread starter amirm

- Start date

> Is there an optimal bandwidth to target in the final master that we should be seeking to purchase from media producers? Such that we are getting a simple copy of the final master and avoiding the conversion step to CD/Redbook spec?

The easy answer is that you want the same data as they used in the DAW. But many DAWs use 32 bit floating point internally, so this isn't actually viable. Most DAWs will run at 96kHz. However, the optimal bandwidth is clearly the one which conveys all useful information to the listener and avoids cluttering the product with redundant or harmful crud. So target 20kHz bandwidth.

But why avoid the conversion? Seriously. Why even bother to ask the question?

> I've seen it. I understand that you can recreate the analog signal of a sine wave with 2 samples perfectly.

There is a great deal more to it than that. Indeed if that is the takeaway it isn't helping.

If you sample a defined bandwidth signal at a rate equivalent to twice the bandwidth you can exactly capture the entire content of the signal. It isn't about sine waves, it is about the entire content.

Add Shannon on information and you have this:

If you have a bandwidth limited channel with a defined signal to noise ratio you can capture the entire content of the channel if you sample at twice the bandwidth and with (S/N in dB) / 6.02 bits per sample.

Which is why a CD gets you 22kHz of bandwidth and 96dB of S/N. Every last vestige of information in the defined channel is captured and will be reproduced again. There are a lot of subtleties in what is going on. And it seems counter-intuitive. But it is solid.
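The two rules above (sample at twice the bandwidth, use S/N divided by 6.02 bits) can be sketched as a small calculation. This is only an illustration: the function name `channel_encoding` is made up here, and rounding the bit count up to a whole number is an assumption about how you would pick a word length in practice.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB of S/N per bit


def channel_encoding(bandwidth_hz: float, snr_db: float) -> tuple[float, int]:
    """Return (minimum sample rate in Hz, whole bits per sample) for a channel."""
    sample_rate_hz = 2.0 * bandwidth_hz          # Nyquist: twice the bandwidth
    bits = math.ceil(snr_db / DB_PER_BIT)        # round up to a whole word length
    return sample_rate_hz, bits


# A channel like the CD one described above: about 22 kHz wide, 96 dB S/N.
rate, bits = channel_encoding(22_050, 96.0)
print(rate, bits)
```

With those inputs the sketch lands on the familiar CD figures of 44.1 kHz and 16 bits.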

Thanks. That's helpful.


A couple more questions then to get more into the practical application.

How is the signal to noise defined in practice?

I see in Amir's DAC measurements that the low-pass filter slope is inconsistently implemented and the ultimate attenuation level is also inconsistent. How does this influence the choice of sample rate?

Lastly, Amir's videos show music-correlated information in the ultrasonic range. Does that information, along with the variable implementation of DAC filters, have any bearing on the final analog signal output?

- Joined

- Oct 11, 2019

- Messages

- 1,838

- Likes

- 2,753

> 44.1, 96 kHz does describe the sampling rate. The theoretical maximum bandwidth is 1/2 of the sample rate.

In an ideal world, the Shannon-Nyquist theorem would operate perfectly, and sound waves, being sums of sinusoids, would be regenerated with no loss of information when sampled at a frequency equal to twice the highest frequency we want to capture. However, because practical anti-aliasing and reconstruction filters need some room to roll off, the sample rate should be set marginally higher. Therefore, 44.1kHz was chosen as the Redbook standard to provide this margin as well as to be compatible with the NTSC videotape recorders that were a popular digital recording medium at the time. I am unsure why 48kHz later became the standard for DAT, though.

- Joined

- Oct 11, 2019

- Messages

- 1,838

- Likes

- 2,753

> So it describes both the sample rate and the bandwidth recorded?

They are two sides of the same coin. Sample rate is measured in Hz, 1/second, which intuitively makes sense because the sample rate is the number of equally spaced signal readings made each second. “Frequency” in this context means how frequently a reading is taken in any given second. Perfectly logical, right?

Here’s the part that might make less sense, and my explanation is an oversimplification. What we call “sound” is our brain’s interpretation of pressure vibrations in the air. Each sound is associated with a specific pattern of pressure over time (among other measurements that can also be made, such as amplitude). This is also measured in Hz, 1/second, representing how many vibrations per second occur at a given sound frequency. As explained earlier, each frequency of sound in Hz can be captured by a sampling rate twice as large in Hz.

Good questions.


> How is the signal to noise defined in practice?

In the simplest form, just measure the energy in your channel when there is no signal.

In reality it is a bit more messy. Noise isn't constant with frequency as noise comes from many sources. So you may want to measure at multiple points across your band, and work from there. Shannon doesn't need a single noise figure, it takes into account the noise at each frequency. Single figures of merit, like say A-weighting give you a noise figure moderated by the ear's response across the spectrum.
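To make the "noise at each frequency" point concrete, here is a rough sketch that applies the Shannon-Hartley formula band by band and adds up the results. The band widths and S/N figures are invented for illustration, and `capacity_bits_per_second` is a hypothetical helper, not something from the thread.

```python
import math


def capacity_bits_per_second(bands):
    """Sum Shannon-Hartley capacity over sub-bands.

    bands: iterable of (width_hz, snr_db) tuples, where snr_db is a power ratio in dB.
    """
    total = 0.0
    for width_hz, snr_db in bands:
        snr_linear = 10 ** (snr_db / 10)               # dB -> power ratio
        total += width_hz * math.log2(1 + snr_linear)  # capacity of this sub-band
    return total


# One flat band at roughly 16-bit S/N, versus the same width with a noisier top end.
flat = capacity_bits_per_second([(22_050, 96.33)])
uneven = capacity_bits_per_second([(10_000, 96.33), (12_050, 70.0)])
print(round(flat), round(uneven))
```

The flat case comes out very close to 44,100 samples per second times 16 bits, which is the per-channel data rate of a CD. The uneven case carries less information, so a single noise figure would overstate what that channel holds.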

> I see in Amir's DAC measurements that the low-pass filter slope is inconsistently implemented and the ultimate attenuation level is also inconsistent. How does this influence the choice of sample rate?

The nominal location of the filter's cut-off is defined by the sample rate. It should reach cut-off before you reach the Nyquist limit. Amir calls out filters that do not meet this requirement. The problem here is that if they don't cut off, signal above the cut-off frequency can leak into the pass band, where it manifests itself as an alias: energy in the pass band at a different frequency to where it really was. In principle this can sound bad. There is a tension between hard cut-off and in-band effects of the filters. Overall, however, there is no excuse for choosing a slow cut-off.

> Lastly, Amir's videos show music-correlated information in the ultrasonic range. Does that information, along with the variable implementation of DAC filters, have any bearing on the final analog signal output?

Yes. Energy up in the ultrasonics is what forms the alias products. If not properly filtered out, it manifests itself in the audible band at frequencies such as the sample rate minus the original frequency. In general, even with the softer filters sometimes seen, you will be hard pressed to actually hear anything, but the mathematics is clear on the reality.
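The "sample rate minus the original frequency" relationship can be worked out for any tone. A minimal sketch, assuming an ideal sampler with no filtering at all; `alias_frequency` is a name chosen for this example.

```python
def alias_frequency(f_hz: float, sample_rate_hz: float) -> float:
    """Frequency between 0 and fs/2 where an unfiltered tone at f_hz shows up."""
    folded = f_hz % sample_rate_hz                 # position within one sample-rate span
    return min(folded, sample_rate_hz - folded)    # fold the upper half back down


# A 30 kHz ultrasonic tone sampled at 44.1 kHz lands at 44.1 - 30 = 14.1 kHz.
print(alias_frequency(30_000, 44_100))
# A 10 kHz tone is already in band, so it stays where it is.
print(alias_frequency(10_000, 44_100))
```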

- Joined

- Feb 23, 2016

- Messages

- 20,690

- Likes

- 37,414

> I am unsure why 48kHz later became the standard for DAT, though.

48 kHz was also due to video, via a different path.

https://www.cardinalpeak.com/blog/why-do-cds-use-a-sampling-rate-of-44-1-khz

This explains the 44.1 kHz.

Two reasons have been given for 48kHz. One is that it gives the recording engineer a bit of wiggle room to pitch correct. The other is that the digital landlines used to reticulate audio to FM radio stations used 32kHz (which meets the FM transmission's 15kHz bandwidth). Prior to the advent of the mathematics and affordable hardware for arbitrary sample rate converters, conversion of sample rates was mostly limited to easy ratios. 3:2 is easy, so 48kHz was easy to convert to 32kHz. So it was already a professional standard when DAT came along.
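A quick way to see what "easy ratios" means is to reduce the conversion ratios to lowest terms. This is just arithmetic with Python's standard `fractions` module; `conversion_ratio` is a hypothetical helper name.

```python
from fractions import Fraction


def conversion_ratio(source_hz: int, target_hz: int) -> Fraction:
    """Output samples per input sample, reduced to lowest terms."""
    return Fraction(target_hz, source_hz)


print(conversion_ratio(48_000, 32_000))  # 2/3
print(conversion_ratio(44_100, 48_000))  # 160/147
```

Going from 48kHz to 32kHz only needs two output samples for every three input samples, while going from 44.1kHz to 48kHz needs 160 for every 147, which is far less convenient for simple hardware.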

Generally however, if they can, any engineer is going to pick a power or half power of two just from their inherent OCD. I would.

There is a persistent conspiracy theory that 48kHz was chosen for DAT to prevent easy copying of CDs.

CD is really an anomaly, and the above cite provides the awful reasons.


respice finem

Major Contributor

- Joined

- Feb 1, 2021

- Messages

- 1,867

- Likes

- 3,777

> ...Why even sample above 25+30khz? It seems like it just adds noise and data bloat to the file and doesn't give any benefit.

Oversampling (twice the desired bandwidth) is beneficial though, "prior art" by the inventors of the CD standard: https://en.wikipedia.org/wiki/Oversampling

/edit: Now I see it's already clarified in previous posts.

An anatomical/physiological aspect apart from maths and sophisticated theories: The "final" analog signal reaching our brain is not identical to what we see on the oscilloscope. It must first pass the amp(s), the speakers, one to several metres of air, and finally, anything left of ultrasound in it will meet this: https://i.pinimg.com/originals/bd/b4/59/bdb459739c272f33be96cd5b36e879b4.jpg which will "annihilate" any remaining ultrasonic information that may still have been there. We simply aren't "built" to hear ultrasound. Children may hear a bit above 20 kHz at sane levels, but not at levels dozens of dB below the average music signal. That is the masking effect, the same way you will not hear a mosquito at a rock concert: https://en.wikipedia.org/wiki/Auditory_masking An adult is very unlikely to hear 20kHz anyway. So, effectively, we are splitting hairs, I'm afraid.


Ok, you do say that doubling of sampling rate is enough to capture "sufficient" data at a given analog signal frequency.

I know that 1 Hz has nothing to do with music, but let's use it as an example to simplify mathematically and visually. So we have 1 full pure sine wave at 1 Hz, and we are sampling it 2 times per second. This means we're getting at most 2 peak points of the signal, which is very far from representing the original waveform. Thus if we used, say, a 20 Hz sampling rate, it would give us a clearer shape of the sine wave. And the same should apply to the higher frequencies... Am I wrong here?

Or maybe all this does not matter because we finally reach the speaker stage for reproducing the sound waves, which itself has mechanical limitations (precise cone movement over the voice coil), and thus all shortcuts done in digital processing are not noticeable?

Sorry for my English


- Joined

- Feb 23, 2016

- Messages

- 20,690

- Likes

- 37,414

> Ok, you do say that doubling of sampling rate is enough to capture "sufficient" data at a given analog signal frequency.

You are wrong. Two sample points would reconstruct the sinewave as well as 20 sample points would. (Yes, I know you actually need ever so slightly more than two sample points per cycle, but that is close enough for our purposes here.)

I know it seems so very non-intuitive, but it is so. Any given set of sample points reconstructs only one band-limited waveform, which will pass through all those points.

Your thinking, that more samples equals more accurate sampling, seems obvious on its face, and it is what confounds so many. It is why higher sample rates do not give higher resolution, only higher bandwidth.

Go watch THE video from Monty. Watch it over and over, keep going until you understand each point along the way. Things will make sense then.
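For anyone who would rather poke at numbers than watch a video, here is a rough sketch of the 1 Hz example. It samples a 1 Hz sine at 2.5 Hz and at 20 Hz, rebuilds the waveform between the samples with sinc interpolation (the Whittaker-Shannon formula), and compares against the true sine. The function names are made up for this sketch, and because it sums a finite window of samples rather than an infinite one, a small truncation error remains in both cases.

```python
import math


def sinc(x: float) -> float:
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def reconstruct(t: float, freq_hz: float, sample_rate_hz: float,
                half_width: int = 2000) -> float:
    """Sinc-interpolate a sampled sine at time t (seconds) from a finite window."""
    total = 0.0
    for n in range(-half_width, half_width + 1):
        sample = math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        total += sample * sinc(t * sample_rate_hz - n)
    return total


def worst_error(sample_rate_hz: float, freq_hz: float = 1.0) -> float:
    """Largest gap between the rebuilt and the true sine over one second."""
    times = [k / 50 for k in range(51)]  # mostly instants between the samples
    return max(abs(reconstruct(t, freq_hz, sample_rate_hz)
                   - math.sin(2 * math.pi * freq_hz * t)) for t in times)


print(worst_error(2.5), worst_error(20.0))
```

Both sample rates track the original sine closely between the sample instants. What is left over comes from cutting the sum short, not from having too few samples per cycle.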

restorer-john

Grand Contributor

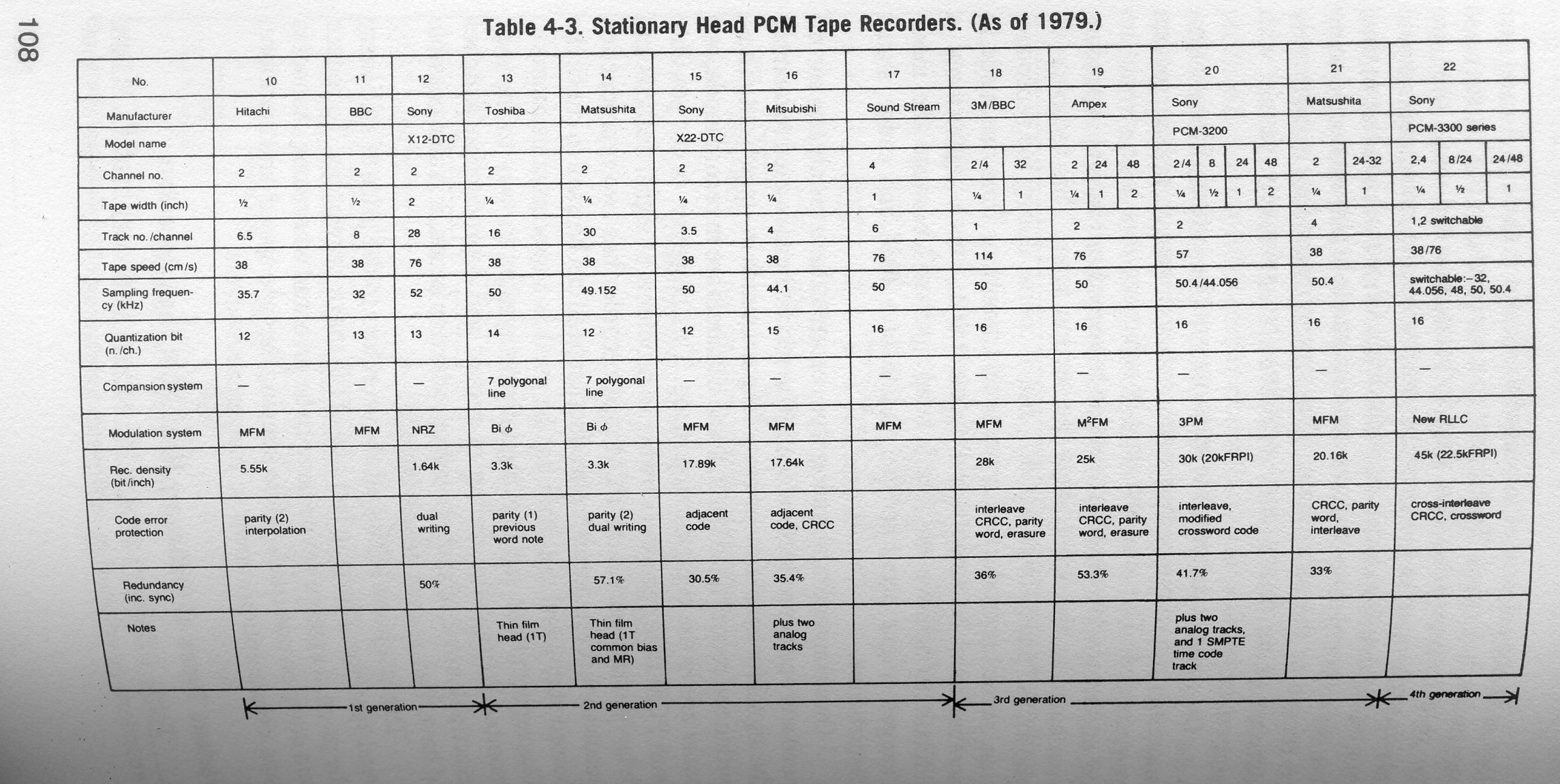

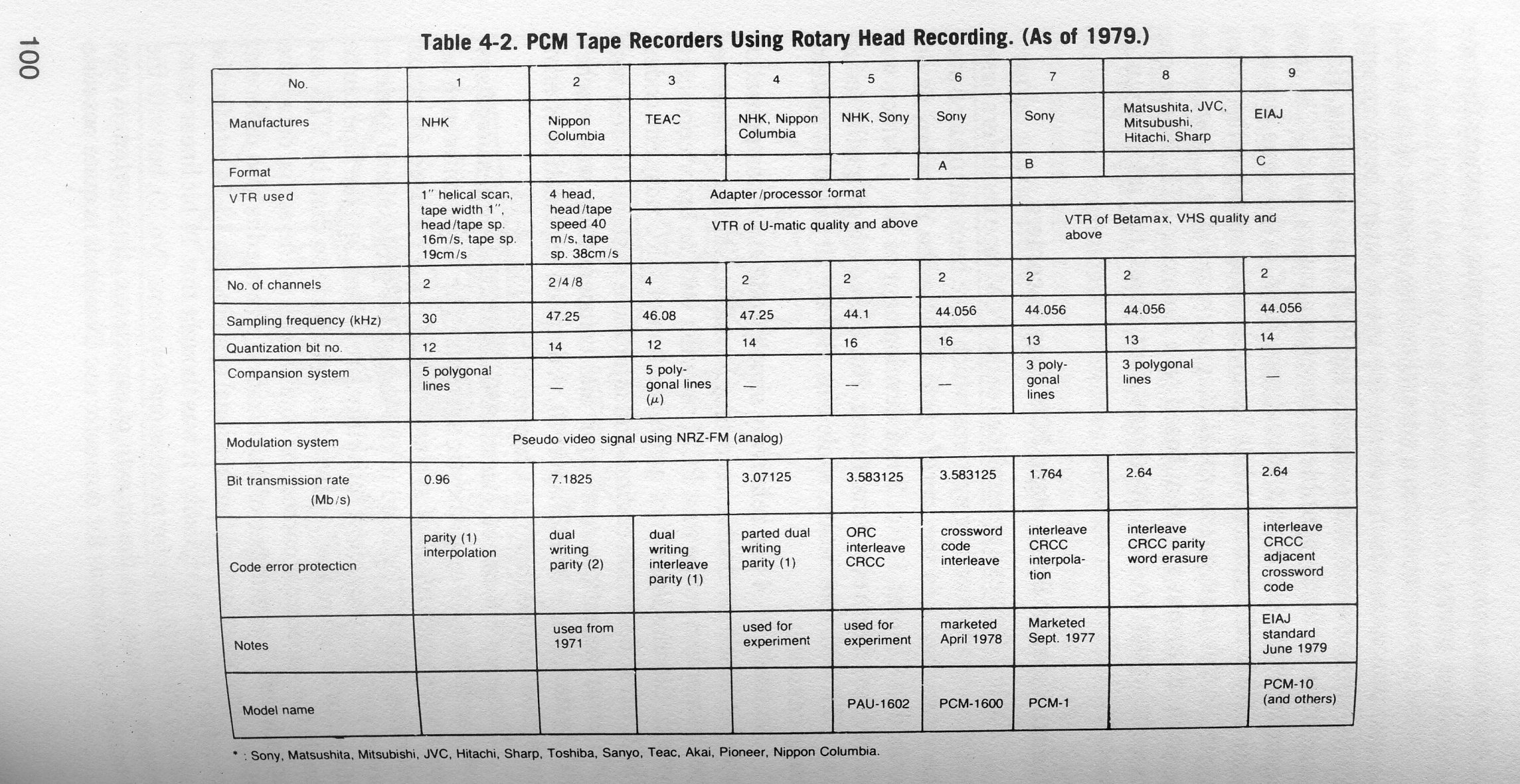

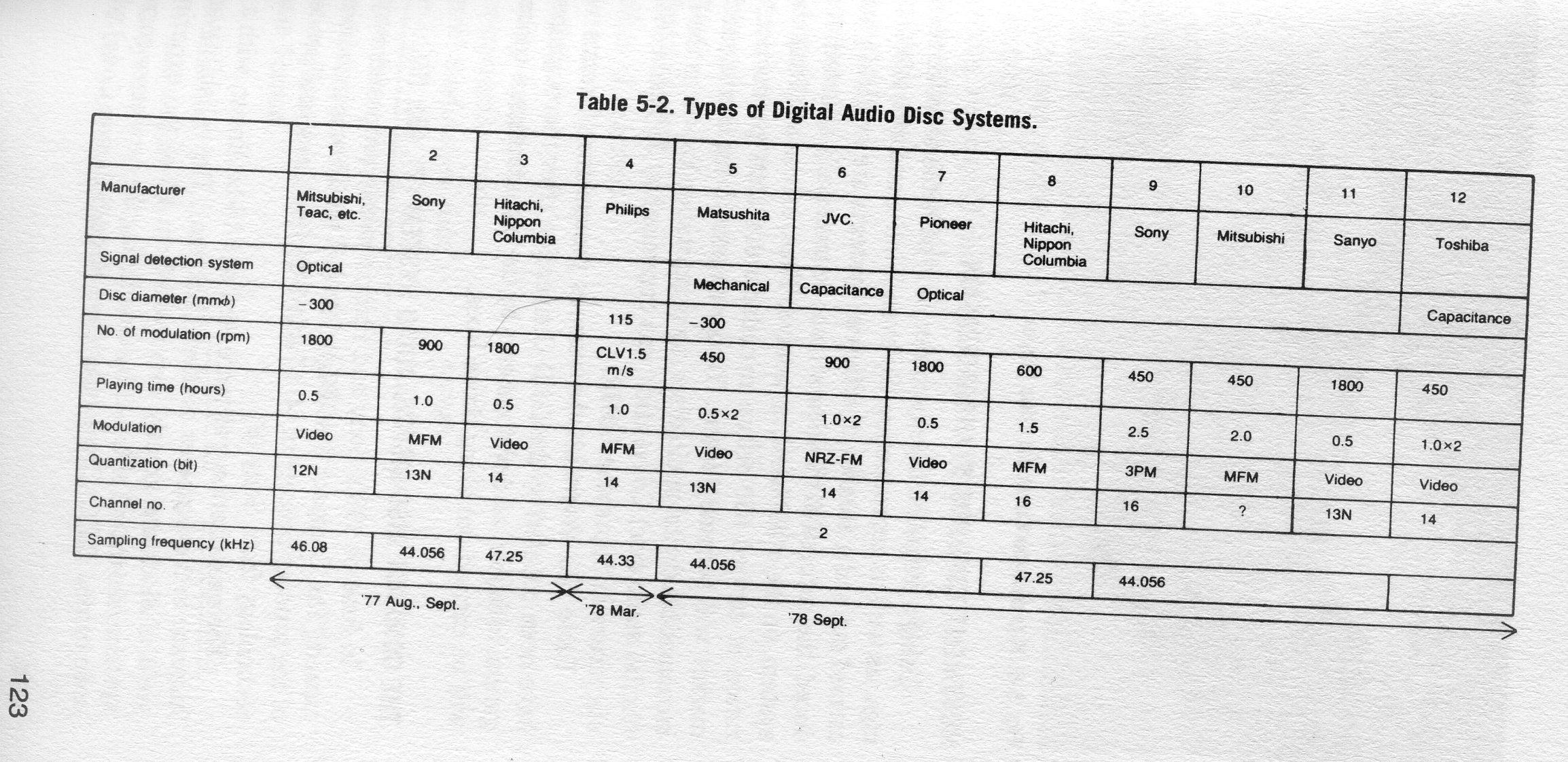

Here are some actual facts on the history of the sampling rates, the players and the timelines in the lead-up to CD, for anyone interested. From Digital Audio Technology (Nakajima, Doi*, Fukuda and Iga).

@amirm's old boss at Sony.

The "N" next to quantization numbers means non-linear.

The actual sample rate on the NTSC-based machines (U-matics) used in early mastering for CD was not 44.1kHz, it was 44.056kHz.

The standardization for domestic adaptors at the time for VCRs was 44.056kHz and 14 bits, 2.643Mb/s.
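The 44.056kHz figure can be reproduced with a little arithmetic. The sketch below assumes the commonly cited layout of 3 audio samples per video line and 245 usable lines per field. Those two numbers are not from the scans above, so treat them as assumptions.

```python
from fractions import Fraction

LINES_PER_FIELD = 245   # usable lines per field (assumed, not from the scans)
SAMPLES_PER_LINE = 3    # audio samples stored on each line (assumed)


def adaptor_sample_rate(fields_per_second: Fraction) -> Fraction:
    """Audio sample rate that fits the video format exactly."""
    return LINES_PER_FIELD * SAMPLES_PER_LINE * fields_per_second


ntsc_colour = adaptor_sample_rate(Fraction(60_000, 1_001))  # 59.94 fields/s
monochrome = adaptor_sample_rate(Fraction(60))              # 60 fields/s
print(float(ntsc_colour), float(monochrome))
```

Under those assumptions the NTSC colour field rate gives about 44,056 samples per second, and an exact 60 fields per second gives 44,100.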


respice finem

Major Contributor

- Joined

- Feb 1, 2021

- Messages

- 1,867

- Likes

- 3,777

Wow, started in 1977, I was 10 years old then. Shows how much "ahead of the pack" this early digital tech was. In 1977, analog tape reigned supreme...

> Or maybe all this does not matter because we finally reach the speaker stage for reproducing the sound waves, which itself has mechanical limitations (precise cone movement over the voice coil), and thus all shortcuts done in digital processing are not noticeable?

You actually almost have it. But what you see in the digital processing steps are not shortcuts. From a deep mathematical perspective they are precise and lose no information. This is really important.

The critical point is that in your analysis there is no difference between the reconstruction filter in the DAC and the mechanical filter presented by the tweeter. If you had a tweeter that cut off at 22kHz, you could, in principle, avoid using a reconstruction filter in the DAC. However that would require that your amplifier was fully capable of handling the raw DAC output. Which is a very tall ask. The reality is the tweeters don't have such perfect cut-offs, but the principle is sound as a thought experiment.

This underlies the critical point that is often unappreciated. The reconstruction filter forms a non-optional part of the chain. It must be present in exactly the same manner as the anti-aliasing filter must be present on the input to the ADC. In fact a DAC and ADC are precisely symmetrical. The reconstruction filter acts to limit the bandwidth of the output to that available in the encoded channel, and in exactly the same manner the anti-aliasing filter limits the bandwidth of the sampled channel before encoding. Once the bandwidth is so limited there are no gaps between the samples. Your sampled sine wave, no matter what the frequency, so long as it falls within the bandwidth limits, will be exactly reproduced. It will get exactly the number of samples over its period that are needed to perfectly reconstruct it within the limits of the S/N of the channel.

Now there is a very interesting subtlety here. Say I have a channel that goes from 100kHz to 120kHz. Shannon/Nyquist says I can encode that with a 40kHz sample rate. Really? Seriously? Yes. If you decode it with the reconstruction filter set to limit between 100kHz and 120kHz, you will get your original signal back. What we call the anti-alias and reconstruction filters on audio gear are actually bandwidth limiting filters; it is just that since we are starting our channel's band at zero we don't notice the implicit high pass part of the filter.

What if I have encoded a channel of a given bandwidth, and I decode it into another channel of the same bandwidth but different start and finishing frequencies? Say I encode 20kHz to 40kHz with a 40kHz clock and decode it with a 0-20kHz filter? Well yes, you get signal. The entire band will have been frequency shifted down. And you can do this at near-arbitrary frequencies. This forms the basis of what has become known as software defined radios. So long as you bandwidth limit correctly you can sample any channel at the Nyquist rate, and encode all the information.
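One way to convince yourself of this is to look at the sample values themselves. The sketch below samples three cosine tones with a 40kHz clock: one inside the 20-40kHz channel, one inside the 100-120kHz channel, and one at baseband. The specific tone frequencies are picked for illustration only.

```python
import math


def sample_tone(freq_hz: float, sample_rate_hz: float, count: int) -> list[float]:
    """Sample a unit cosine at the given rate, starting at t = 0."""
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(count)]


FS = 40_000.0
mid_band = sample_tone(25_000.0, FS, 64)     # inside the 20-40 kHz channel
high_band = sample_tone(105_000.0, FS, 64)   # inside the 100-120 kHz channel
baseband = sample_tone(15_000.0, FS, 64)     # inside a 0-20 kHz channel

gap_mid = max(abs(a - b) for a, b in zip(mid_band, baseband))
gap_high = max(abs(a - b) for a, b in zip(high_band, baseband))
print(gap_mid, gap_high)
```

All three tones produce the same sample values apart from floating point rounding, so the band edges of the decoding filter alone decide which tone comes back out. Note that for these two bands the mapping is mirrored rather than a plain shift: 25kHz lands at 40 minus 25, that is 15kHz, when decoded at baseband.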

This is why you need to ensure your reconstruction filter is not leaky, because if it is you are breaking the symmetry of the channels, and stuff starts to leak in in a manner that is not actually valid.

But to reiterate the core point. Shannon tells us exactly what the requirements are, and despite intuition to the contrary, there is nothing that any human can detect that requires higher than the Nyquist sample rate for a 20kHz wide channel to fully capture. Nothing. There are no gaps between the samples, and the samples taken exactly characterise the signal within the channel in a manner that allows exact reconstruction within the limits of the defined channel encoding.

restorer-john

Grand Contributor

> Wow, started in 1977, I was 10 years old then. Shows how much "ahead of the pack" this early digital tech was. In 1977, analog tape reigned supreme...

Nippon Columbia's machine was 1971...

> Go watch THE video from Monty. Watch it over and over, keep going until you understand each point along the way. Things will make sense then.

Thanks, a really good educating video. I realized a lot. Now I need to dig into the term "bandwidth limited signal", which I didn't fully understand...

Regarding quantization, this process "joins" the sampled points of signal amplitudes and smoothes them to reconstruct the analog wave, but it produces noise (the difference between an input value and its quantized value, or quantization error). Now here comes a logical question: if we use more than twice the sampling rate, theoretically we could have more sampled points, fewer quantization errors and thus less noise?

> Oversampling (twice the desired bandwidth) is beneficial though

Oversampling gets us into an entire new area of discussion. The Wikipedia link is a nice quick overview, but it gets deeper than this as we swiftly get into noise shaping. Sampling at four times the Nyquist rate can trivially get us one additional bit of resolution (each doubling is worth about 3dB, or half a bit). But that is only of benefit if there is actual signal to be found and if there is appropriate dither present. Otherwise it gets you nothing. It is also about the most inefficient way around of getting another bit of resolution. Which is where noise shaping comes in. Internal to ADCs and DACs, appropriate use of oversampling is commonly used to get the needed performance. Outside that realm there is no justification.


"Joins the points" is a loaded phrase. As is the word "smoothes". I would avoid any notion of joining the points. A filter is applied to the samples, and that filter has a very specific mathematical property such that the resultant waveform is the sum of a series of sinc functions. Within the defined channel characteristics that sum of functions is indistinguishable from the source signal.

More sampling can't reduce the quantisation error by itself. The quantiser is defined by its LSB. But in the presence of correct dither, the more samples you take the more you can resolve. Quadruple the sample rate, ensure your dither is good, and you get one additional bit. This is a terrible way of getting just one additional bit, as it costs you a fourfold increase in information rate. So in a 16 bit channel you get at best a 6.25% improvement in bit depth for 300% more data. Which is pretty awful. Best to just use a DAC that has that additional resolution and encode the bits together. Justifying higher sample rates this way isn't of much value.
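The cost of buying resolution this way can be put into numbers. The sketch below uses the usual textbook estimate for plain oversampling with white, properly dithered quantisation noise and no noise shaping: the in-band S/N improves by 10·log10 of the oversampling ratio. That white-noise model is an assumption, and `extra_bits` is a name invented for this example.

```python
import math


def extra_bits(oversampling_ratio: float) -> float:
    """Bits of in-band resolution gained from plain oversampling.

    Assumes white (dithered) quantisation noise and no noise shaping.
    """
    snr_gain_db = 10 * math.log10(oversampling_ratio)
    return snr_gain_db / (20 * math.log10(2))   # about 6.02 dB per bit


for ratio in (2, 4, 16):
    more_data = (ratio - 1) * 100
    print(ratio, round(extra_bits(ratio), 2), f"{more_data}% more data")
```

By this estimate each doubling of the sample rate is worth about half a bit, so one whole extra bit takes roughly four times the data, and two bits take sixteen times.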

Newman

Major Contributor

- Joined

- Jan 6, 2017

- Messages

- 3,503

- Likes

- 4,329

I understand cymbal crashes have significant ultrasonic overtones, but am I right in saying the recording system as well as our ears, basically captures the in-band intermodulation effects of these ultrasonic tones, so we don't really need to have the original recorded? - Hope that makes sense

Confirmed.
